Advanced Techniques and Applications for Building Unified Namespaces

This article provides a demonstration of creating a simple Unified Namespace using the Intelligence Hub.

What Does This Article Cover?

This article provides a sample Intelligence Hub project file for readers to import. The project demonstrates key functionality for building Unified Namespaces with the Intelligence Hub. The article covers the following topics:

- Preparation

- Use Case: Replicating OPC UA Namespaces

- Use Case: Templating Asset Data to a Transform Pipeline

- Use Case: Templating Asset Data to a Buffer Pipeline

- Use Case: Templating and Event Based Flows

- Use Case: Connecting and Conditioning ERP Data

- Summary

Preparation

- We recommend installing the Intelligence Hub as close to the data source(s) as possible. This ensures reliable access to the source data and enables the use of Store and Forward: if the factory network loses communication with the output target, the Intelligence Hub buffers the data locally in a SQLite database and forwards it once the output target is healthy again. This is a key benefit of deploying the Intelligence Hub at the edge.

- Enable the Intelligence Hub MQTT broker

- In the left-hand navigation panel, navigate to Manage, and click Settings

- In the Application tab, under the MQTT Broker section, enable the broker. If ports 1885 and 1886 are already in use on the server hosting your Intelligence Hub, change them to ports of your choosing; otherwise, accept the defaults. Click the Save button at the top of the page.

- Import the required Project

- Download the project file using the link below.

- In the left-hand navigation panel, navigate to Manage, and click Project.

- Within the Import tab, ensure Full Project is off (otherwise your existing project will be overwritten).

- Choose the downloaded file using the Project box and click the Import button.

- Update the imported Connections as required

- Navigate to Configure and click Connections. Click “Example_UNS_MQTT” and update the MQTT settings as required based on the prior preparation step. The Port value must match the port entered in the MQTT Broker settings.

- Navigate to Configure and click Connections. Click “Example_UNS_SQL”, type the following value in the password field, and click the Save button.

password

- Set up the UNS client

- In the left-hand navigation panel, navigate to Tools, right-click UNS client, and choose Open Link in New Tab.

- Enter login information.

- For Connection select "Example_UNS_MQTT", leave the default Client ID and Subscribed Topics (wildcard #).

- Click the Connect button.

- Confirm UNS client says "Example_UNS_MQTT".

- Return to the previous web browser tab.
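The UNS client subscribes with the multi-level wildcard #, which matches every topic on the broker. As a rough illustration of how MQTT topic filters behave, the sketch below implements filter matching in plain JavaScript. The function name topicMatches is hypothetical and is not part of the Intelligence Hub; it only mirrors the "#" and "+" wildcard rules of MQTT and ignores the special handling of topics that start with "$".

```javascript
// Hypothetical helper: returns true when an MQTT topic matches a subscription filter.
// "#" matches all remaining levels (including none); "+" matches exactly one level.
// Simplification: the special case for "$"-prefixed topics is not handled.
function topicMatches(filter, topic) {
  const f = filter.split("/");
  const t = topic.split("/");
  for (let i = 0; i < f.length; i++) {
    if (f[i] === "#") return i === f.length - 1; // "#" is only valid as the last level
    if (i >= t.length) return false;             // filter is longer than the topic
    if (f[i] !== "+" && f[i] !== t[i]) return false;
  }
  return f.length === t.length;
}

const sampleTopic = "Enterprise/Site-1/Area-1/Line-1/Assets/CNC/FANUC_1001/Original_Payload";
console.log(topicMatches("#", sampleTopic));                               // true
console.log(topicMatches("Enterprise/Site-1/#", sampleTopic));             // true
console.log(topicMatches("Enterprise/+/Area-1", "Enterprise/Site-1/Area-1")); // true
console.log(topicMatches("Enterprise/Site-2/#", sampleTopic));             // false
```

Subscribing to # is convenient for exploring a demo namespace; a narrower filter such as Enterprise/Site-1/# limits the view to one site.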

Use Case: Replicating OPC UA Namespaces

This use case showcases how the Intelligence Hub can replicate existing source systems to a Unified Namespace. We are not modeling the data; we are replicating the source OPC UA data to MQTT using Pipelines. Users may elect to output this data to a functional sub-topic named "Raw_Namespace" to indicate that the data is unmodeled and unstructured.

- Navigate to Pipeline “Example_UNS_OPC_UA_Breakup”, open the "Flow_Trigger" stage, and note that the three References are direct branches from the OPC_UA connection.

- Click one of the References. Click on the Test Input button and review the results.

The OPC_UA “Branch” input type has two key settings: Max Branch Depth (how many levels under the identifier to read) and Cache Interval (how often the branch structure refreshes to reflect new or removed tags).

- Use the Input's Usage tab to navigate back to Pipeline “Example_UNS_OPC_UA_Breakup”.

- The third stage in Pipeline “Example_UNS_OPC_UA_Breakup” is a Flatten stage, which flattens any complex JSON hierarchy into a single flat object.

- Original: { "_name": "Packaging", "_model": "ComplexData", "_timestamp": 1711634379957, "_Statistics": { "_FailedReads": 0, "_FailedWrites": 0, "_MaxPendingReads": 0, "_MaxPendingWrites": 0, "_NextReadPriority": 0, "_PendingReads": 0, "_PendingWrites": 0, "_Reset": false, "_RxBytes": 0, "_SuccessfulReads": 0, "_SuccessfulWrites": 0, "_TxBytes": 0 }, "_System": { "_Description": null, "_EnableDiagnostics": false, "_FloatHandlingType": "Replaced with zero", "_WriteOptimizationDutyCycle": 10 }, "InjectionMolding1": { "_System": { "_ActiveTagCount": 3, "_DemandPoll": false, "_Description": null, "_DeviceId": "1", "_Enabled": true, "_Error": false, "_NoError": true, "_ScanMode": "UseClientRate", "_ScanRateMs": 1000, "_SecondsInError": 0, "_Simulated": false }, "MachineID": "MACHINE1", "PressSpeed": 21.9, "ProductID": 10003, "Temperature": 75, "TimeInOperation": 17568737 }, "InjectionMolding2": { "_System": { "_ActiveTagCount": 1, "_DemandPoll": false, "_Description": null, "_DeviceId": "1", "_Enabled": true, "_Error": false, "_NoError": true, "_ScanMode": "UseClientRate", "_ScanRateMs": 1000, "_SecondsInError": 0, "_Simulated": false }, "MachineID": "MACHINE2", "PressSpeed": 21.9, "ProductID": 10001, "Temperature": 24.44, "TimeInOperation": 17568737 }, "PLC1": { "_System": { "_ActiveTagCount": 6, "_DemandPoll": false, "_Description": null, "_DeviceId": "1", "_Enabled": true, "_Error": false, "_NoError": true, "_ScanMode": "UseClientRate", "_ScanRateMs": 1000, "_SecondsInError": 0, "_Simulated": false }, "LineState": "RUNNING", "MachineID": "2500", "TimeInOperation": 17568737, "UnitsCompletedPerHour": 35364600, "UnitsInRun": 35364500, "WorkOrderID": 100895 }, "PLC2": { "_System": { "_ActiveTagCount": 6, "_DemandPoll": false, "_Description": null, "_DeviceId": "1", "_Enabled": true, "_Error": false, "_NoError": true, "_ScanMode": "UseClientRate", "_ScanRateMs": 1000, "_SecondsInError": 0, "_Simulated": false }, "LineState": "RESETTING", "MachineID": "2518", "TimeInOperation": 17568737, "UnitsCompletedPerHour": 58885160, "UnitsInRun": 35364500, "WorkOrderID": 100515 }, "PLC3": { "_System": { "_ActiveTagCount": 7, "_DemandPoll": false, "_Description": null, "_DeviceId": "1", "_Enabled": true, "_Error": false, "_NoError": true, "_ScanMode": "UseClientRate", "_ScanRateMs": 1000, "_SecondsInError": 0, "_Simulated": false }, "LineState": "RESETTING", "MachineID": "2518", "TimeInOperation": 15810559, "UnitsCompletedTotalCount": 53022980, "UnitsInRun": 31846980, "UnitsRejectedTotalCount": 1594488, "WorkOrderID": 100515 } }

- Flattened: { "_name": "Packaging", "_model": "ComplexData", "_timestamp": 1711634379957, "_Statistics/_FailedReads": 0, "_Statistics/_FailedWrites": 0, "_Statistics/_MaxPendingReads": 0, "_Statistics/_MaxPendingWrites": 0, "_Statistics/_NextReadPriority": 0, "_Statistics/_PendingReads": 0, "_Statistics/_PendingWrites": 0, "_Statistics/_Reset": false, "_Statistics/_RxBytes": 0, "_Statistics/_SuccessfulReads": 0, "_Statistics/_SuccessfulWrites": 0, "_Statistics/_TxBytes": 0, "_System/_Description": null, "_System/_EnableDiagnostics": false, "_System/_FloatHandlingType": "Replaced with zero", "_System/_WriteOptimizationDutyCycle": 10, "InjectionMolding1/_System/_ActiveTagCount": 3, "InjectionMolding1/_System/_DemandPoll": false, "InjectionMolding1/_System/_Description": null, "InjectionMolding1/_System/_DeviceId": "1", "InjectionMolding1/_System/_Enabled": true, "InjectionMolding1/_System/_Error": false, "InjectionMolding1/_System/_NoError": true, "InjectionMolding1/_System/_ScanMode": "UseClientRate", "InjectionMolding1/_System/_ScanRateMs": 1000, "InjectionMolding1/_System/_SecondsInError": 0, "InjectionMolding1/_System/_Simulated": false, "InjectionMolding1/MachineID": "MACHINE1", "InjectionMolding1/PressSpeed": 21.9, "InjectionMolding1/ProductID": 10003, "InjectionMolding1/Temperature": 75, "InjectionMolding1/TimeInOperation": 17568737, "InjectionMolding2/_System/_ActiveTagCount": 1, "InjectionMolding2/_System/_DemandPoll": false, "InjectionMolding2/_System/_Description": null, "InjectionMolding2/_System/_DeviceId": "1", "InjectionMolding2/_System/_Enabled": true, "InjectionMolding2/_System/_Error": false, "InjectionMolding2/_System/_NoError": true, "InjectionMolding2/_System/_ScanMode": "UseClientRate", "InjectionMolding2/_System/_ScanRateMs": 1000, "InjectionMolding2/_System/_SecondsInError": 0, "InjectionMolding2/_System/_Simulated": false, "InjectionMolding2/MachineID": "MACHINE2", "InjectionMolding2/PressSpeed": 21.9, "InjectionMolding2/ProductID": 10001, "InjectionMolding2/Temperature": 24.44, "InjectionMolding2/TimeInOperation": 17568737, "PLC1/_System/_ActiveTagCount": 6, "PLC1/_System/_DemandPoll": false, "PLC1/_System/_Description": null, "PLC1/_System/_DeviceId": "1", "PLC1/_System/_Enabled": true, "PLC1/_System/_Error": false, "PLC1/_System/_NoError": true, "PLC1/_System/_ScanMode": "UseClientRate", "PLC1/_System/_ScanRateMs": 1000, "PLC1/_System/_SecondsInError": 0, "PLC1/_System/_Simulated": false, "PLC1/LineState": "RUNNING", "PLC1/MachineID": "2500", "PLC1/TimeInOperation": 17568737, "PLC1/UnitsCompletedPerHour": 35364600, "PLC1/UnitsInRun": 35364500, "PLC1/WorkOrderID": 100895, "PLC2/_System/_ActiveTagCount": 6, "PLC2/_System/_DemandPoll": false, "PLC2/_System/_Description": null, "PLC2/_System/_DeviceId": "1", "PLC2/_System/_Enabled": true, "PLC2/_System/_Error": false, "PLC2/_System/_NoError": true, "PLC2/_System/_ScanMode": "UseClientRate", "PLC2/_System/_ScanRateMs": 1000, "PLC2/_System/_SecondsInError": 0, "PLC2/_System/_Simulated": false, "PLC2/LineState": "RESETTING", "PLC2/MachineID": "2518", "PLC2/TimeInOperation": 17568737, "PLC2/UnitsCompletedPerHour": 58885160, "PLC2/UnitsInRun": 35364500, "PLC2/WorkOrderID": 100515, "PLC3/_System/_ActiveTagCount": 7, "PLC3/_System/_DemandPoll": false, "PLC3/_System/_Description": null, "PLC3/_System/_DeviceId": "1", "PLC3/_System/_Enabled": true, "PLC3/_System/_Error": false, "PLC3/_System/_NoError": true, "PLC3/_System/_ScanMode": "UseClientRate", "PLC3/_System/_ScanRateMs": 1000, "PLC3/_System/_SecondsInError": 0, "PLC3/_System/_Simulated": false, "PLC3/LineState": "RESETTING", "PLC3/MachineID": "2518", "PLC3/TimeInOperation": 15810559, "PLC3/UnitsCompletedTotalCount": 53022980, "PLC3/UnitsInRun": 31846980, "PLC3/UnitsRejectedTotalCount": 1594488, "PLC3/WorkOrderID": 100515 }

- The fourth stage in Pipeline “Example_UNS_OPC_UA_Breakup” is a Breakup All stage, which takes the flattened branch object and breaks it up into individual key:value pairs.

- Within the final WriteNewTarget Stage, we use the following syntax to dynamically build our topics based on the Breakup All Stage: {{event.metadata.breakupName}}
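The Flatten and Breakup All stages described above can be approximated in a few lines of plain JavaScript. The sketch below only illustrates the idea and is not the Intelligence Hub's implementation: flattenObject and breakupAll are hypothetical helper names, the "/" delimiter is chosen to match the flattened sample above, and the "Raw_Namespace" prefix is the example sub-topic mentioned at the start of this use case.

```javascript
// Recursively flatten nested objects into one level, joining keys with a delimiter.
function flattenObject(obj, delimiter = "/", prefix = "", out = {}) {
  for (const [key, value] of Object.entries(obj)) {
    const path = prefix ? prefix + delimiter + key : key;
    if (value !== null && typeof value === "object" && !Array.isArray(value)) {
      flattenObject(value, delimiter, path, out);
    } else {
      out[path] = value; // primitives, null, and arrays are kept as leaf values
    }
  }
  return out;
}

// Split a flat object into one { name, value } event per key.
function breakupAll(flat) {
  return Object.entries(flat).map(([name, value]) => ({ name, value }));
}

// A small excerpt shaped like the Packaging branch shown above.
const branch = {
  _name: "Packaging",
  PLC1: { LineState: "RUNNING", _System: { _Enabled: true } },
};

const flatBranch = flattenObject(branch);
// flatBranch: { _name: "Packaging", "PLC1/LineState": "RUNNING", "PLC1/_System/_Enabled": true }

for (const event of breakupAll(flatBranch)) {
  // event.name plays the role of {{event.metadata.breakupName}} in the topic.
  console.log(`Raw_Namespace/${event.name}`, JSON.stringify(event.value));
}
```

Because each key of the flattened object already contains the full path, using it as the topic suffix reproduces the source hierarchy in the broker without any modeling.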

Use Case: Templating Asset Data to a Transform Pipeline

This use case outlines how to use Templating to model multiple sources of data together into a single payload. We will utilize a Transform Pipeline to show how you can transform the shape of the payloads to satisfy target systems' data structure needs. This Pipeline is designed to scale to many like asset payloads utilizing standard attribute names.

- Let’s start by reviewing our Dynamic Templating source. Navigate to Connections “Example_UNS_SQL”, click Inputs, open “Example_UNS_SQL_Input_Get_All_CNC_Machines_AssetIDs” and click the Test Input button.

This returns an array of our AssetID values, which in this use case can be used to template configurations from one to many data points. To take advantage of Templating, you will need uniformity in the source systems.

- Navigate to Instance “Example_UNS_Fanuc_Templated_Child”. Click the Test Instance button. Note that we have a Templated Instance with only one Template value, “Fanuc_1001”. This single value is used for testing; we will dynamically drive Template parameters from our Flow.

- Within “Example_UNS_Fanuc_Templated_Child”, you can see we are dynamically driving the Template parameters to our OPC UA collection and SQL inputs.

- Navigate to Instance “Example_UNS_CNC_Machine”. Click the Test Instance button. Note that we have a Parameterized Instance mapped to our “ProcessData” attribute. This shows how to re-use Models/Instances.

- Navigate to Pipeline “Example_UNS_Asset_Transforms” and the "Flow_Trigger" stage. Note that we have Templating enabled with a dynamic source {{Connection.Example_UNS_SQL.Example_UNS_SQL_Input_Get_All_CNC_Machines_AssetIDs}}. We are dynamically driving the AssetID value to other configuration objects with the (AssetID={{this.AssetID}}) syntax within the References.

- Let’s review our first target. Note we are starting our Pipeline with a branch to WriteOriginal, which outputs the payload to the predefined Example_UNS_MQTT_Output_CNC_Original output. Click the WriteOriginal Target hyperlink {{Connection.Example_UNS_MQTT.Example_UNS_MQTT_Output_CNC_Original}} and review the Topic structure. You can see we are leveraging Dynamic Referencing for the ProcessData.AssetType and ProcessData.AssetID Attributes.

Dynamic Referencing is a key design pattern to enable use cases to quickly scale to other like-payloads. As long as our source payloads have attributes ProcessData.AssetType and ProcessData.AssetID, they can utilize this output.

- Navigate back to Pipeline “Example_UNS_Asset_Transforms”. The other branch leads to a Flatten stage, which flattens our JSON hierarchy to a single object.

- The next stage, Transform, uses stage.setMetadata so that we can reference the ProcessData_AssetID and ProcessData_AssetType values within our upcoming WriteNew stages.

Note: In Intelligence Hub version 4.3.0, the "Transform" stage was renamed to the "JavaScript" stage.

Original: { "_name": "Fanuc_1002", "_model": "CNC_Machine_Simple_Model", "_timestamp": 1711635046385, "Enterprise": "Enterprise", "Site": "Site-1", "Area": "Area-1", "Line": "Line-1", "ProcessData": { "AssetID": "FANUC_1002", "AssetType": "CNC", "Temperature": 25.5576, "Temperature_UOM": "C", "CuttingSpeed": 101, "PowerCurrent": 208.4758758544922, "FeedRate": 13.97707748413086, "LastServiceDate": "2023-09-07T00:00:00.000Z", "LastServiceNotes": "Replaced spindle belt" } }

Flattened: { "Enterprise": "Enterprise", "Site": "Site-1", "Area": "Area-1", "Line": "Line-1", "ProcessData_AssetID": "FANUC_1002", "ProcessData_AssetType": "CNC", "ProcessData_Temperature": 25.5576, "ProcessData_Temperature_UOM": "C", "ProcessData_CuttingSpeed": 101, "ProcessData_PowerCurrent": 208.4758758544922, "ProcessData_FeedRate": 13.97707748413086, "ProcessData_LastServiceDate": "2023-09-07T00:00:00.000Z", "ProcessData_LastServiceNotes": "Replaced spindle belt" }

- The next Stage publishes the transformed payload via the MQTT Broker.

Enterprise/Site-1/Area-1/Line-1/Assets/{{event.metadata.ProcessData_AssetType}}/{{event.metadata.ProcessData_AssetID}}/Flattened_Payload

- In our next Stage, Breakup_Payload, Breakup Type "All" breaks our JSON object of 13 attributes into 13 different payloads of individual events.

- Within our final stage, Write_Broken_Up_Payload_To_MQTT, we use the syntax {{event.metadata.breakupName}} to create our individual topics based on the previous Breakup_Payload Stage.

Enterprise/Site-1/Area-1/Line-1/Assets/{{event.metadata.ProcessData_AssetType}}/{{event.metadata.ProcessData_AssetID}}/Broken_Up_Payload/{{event.metadata.breakupName}}

- From the UNS client, compare the following MQTT topics to review the differences:

Enterprise/Site-1/Area-1/Line-1/Assets/CNC/FANUC_1001/Original_Payload

Enterprise/Site-1/Area-1/Line-1/Assets/CNC/FANUC_1001/Flattened_Payload

Enterprise/Site-1/Area-1/Line-1/Assets/CNC/FANUC_1001/Broken_Up_Payload/#

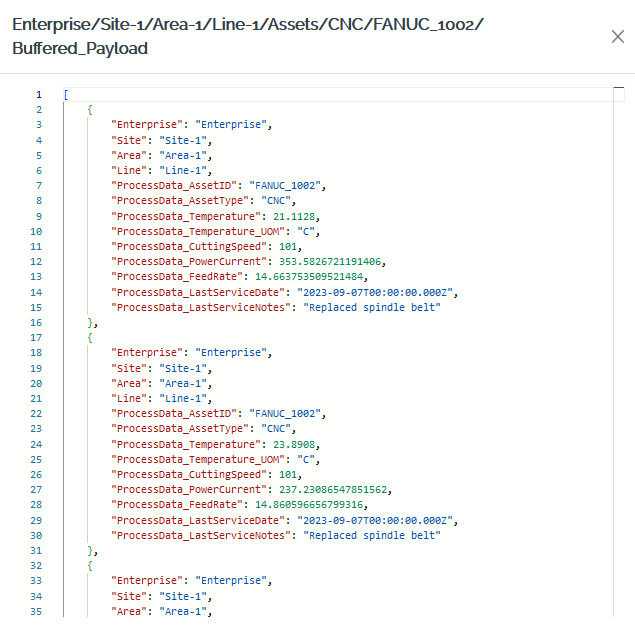
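To make the topic syntax in this use case more concrete, the sketch below mimics how {{event.metadata.*}} placeholders could resolve against metadata set earlier in the Pipeline. It is a stand-in written in plain JavaScript, not Intelligence Hub code: renderTopic and sampleEvent are hypothetical names, and the metadata is copied by hand where the Pipeline itself would call stage.setMetadata.

```javascript
// Hypothetical stand-in for template resolution: replaces {{event.metadata.<key>}}
// placeholders with values from the event's metadata and fails loudly on a missing key.
function renderTopic(template, event) {
  return template.replace(/\{\{event\.metadata\.(\w+)\}\}/g, (match, key) => {
    if (!(key in event.metadata)) {
      throw new Error(`Missing metadata key: ${key}`);
    }
    return String(event.metadata[key]);
  });
}

const sampleEvent = {
  value: { ProcessData_AssetID: "FANUC_1002", ProcessData_AssetType: "CNC" },
  metadata: {},
};

// Copy the two attributes into metadata so later stages can reference them.
sampleEvent.metadata.ProcessData_AssetID = sampleEvent.value.ProcessData_AssetID;
sampleEvent.metadata.ProcessData_AssetType = sampleEvent.value.ProcessData_AssetType;

const topicTemplate =
  "Enterprise/Site-1/Area-1/Line-1/Assets/{{event.metadata.ProcessData_AssetType}}/" +
  "{{event.metadata.ProcessData_AssetID}}/Flattened_Payload";

console.log(renderTopic(topicTemplate, sampleEvent));
// Enterprise/Site-1/Area-1/Line-1/Assets/CNC/FANUC_1002/Flattened_Payload
```

Keeping the asset type and ID in metadata rather than hard-coding them in the topic is what lets one Pipeline serve every asset that exposes the same attribute names.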

Use Case: Templating Asset Data to a Buffer Pipeline

This use case outlines how to use Pipelines to buffer incoming payloads over a set time or size window. This functionality simplifies machine learning use cases. Instead of writing data every second to a cloud storage endpoint (such as AWS S3 or Azure Blob Storage), we can create a buffered array of data, apply file formatting changes or compression, and then output to the storage endpoint. This Pipeline is designed to scale to many like-asset payloads utilizing standard attribute names.

- Navigate to Pipeline “Example_UNS_Asset_Buffer”.

- The first stage, Flatten, flattens our JSON hierarchy to a single object.

Original: { "_name": "Fanuc_1002", "_model": "CNC_Machine_Simple_Model", "_timestamp": 1711561066790, "Enterprise": "Enterprise", "Site": "Site-1", "Area": "Area-1", "Line": "Line-1", "ProcessData": { "AssetID": "FANUC_1002", "AssetType": "CNC", "Temperature": 21.3906, "Temperature_UOM": "C", "CuttingSpeed": 101, "PowerCurrent": 241.2214813232422, "FeedRate": 14.231868743896484, "LastServiceDate": "2023-09-07T00:00:00.000Z", "LastServiceNotes": "Replaced spindle belt" } }

Flattened: { "Enterprise": "Enterprise", "Site": "Site-1", "Area": "Area-1", "Line": "Line-1", "ProcessData_AssetID": "FANUC_1002", "ProcessData_AssetType": "CNC", "ProcessData_Temperature": 25.94651830444336, "ProcessData_Temperature_UOM": "C", "ProcessData_CuttingSpeed": 101, "ProcessData_PowerCurrent": 389.9405212402344, "ProcessData_FeedRate": 13.844867706298828, "ProcessData_LastServiceDate": "2023-09-07T00:00:00.000Z", "ProcessData_LastServiceNotes": "Replaced spindle belt" }

- The next Stage, Transform_Set_Metadata, uses stage.setMetadata to store multiple source attributes for use within our upcoming Stages.

- The next Stage, Time_Buffer_60_Seconds, is a Time Buffer Stage that creates an array of our events over a period of 60 seconds. The Flow trigger's interval is also relevant here: the Pipeline obtains data every 10 seconds, so each 60-second window collects roughly six events per asset.

Take note of the Time Buffer Stage Window Expression: stage.setBufferKey(event.value.ProcessData_AssetID). Here we use ProcessData_AssetID from our earlier Transform stage as a unique "BufferKey" identifier to route events into separate buffers. This ensures the array payloads are separated per AssetID rather than combined into one large payload that mixes the AssetIDs.

- Our final stage, Write_Buffered_Payload_To_MQTT, is a WriteNew Stage that uses our event.metadata references to build an appropriate topic structure for outputting the buffered payloads to our namespace.

- Navigate to UNS client, topic Enterprise/Site-1/Area-1/Line-1/Assets/CNC/FANUC_1001/Buffered_Payload, and review the results.

Write New Syntax: {{event.metadata.Enterprise}}/{{event.metadata.Site}}/{{event.metadata.Area}}/{{event.metadata.Line}}/Assets/{{event.metadata.ProcessData_AssetType}}/{{event.metadata.bufferKey}}/Buffered_Payload

Example Output:

Enterprise/Site-1/Area-1/Line-1/Assets/CNC/FANUC_1001/Buffered_Payload
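The effect of buffering per AssetID can be sketched with a small in-memory model. This is an illustration under simplifying assumptions, not the Time Buffer Stage itself: KeyedTimeBuffer is a hypothetical class, timestamps are passed in explicitly so the example is deterministic, and a buffer is released when the next event for that key arrives after the window has elapsed (a real stage would more likely flush on a timer).

```javascript
// Hypothetical in-memory model of a keyed time buffer. Events are grouped by key, and
// a key's buffer is released once the window has elapsed since that key's first event.
class KeyedTimeBuffer {
  constructor(windowMs) {
    this.windowMs = windowMs;
    this.buffers = new Map(); // key -> { start, events }
  }

  // Add an event; returns { key, events } when the key's window has elapsed, else null.
  add(key, event, nowMs) {
    let flushed = null;
    const current = this.buffers.get(key);
    if (current && nowMs - current.start >= this.windowMs) {
      flushed = { key, events: current.events };
      this.buffers.delete(key);
    }
    if (!this.buffers.has(key)) {
      this.buffers.set(key, { start: nowMs, events: [] });
    }
    this.buffers.get(key).events.push(event);
    return flushed;
  }
}

const timeBuffer = new KeyedTimeBuffer(60000);

// Two assets polled every 10 seconds, similar to the Flow described above.
for (let t = 0; t <= 60000; t += 10000) {
  for (const assetId of ["FANUC_1001", "FANUC_1002"]) {
    const flushed = timeBuffer.add(assetId, { ProcessData_AssetID: assetId, t }, t);
    if (flushed) {
      // flushed.key plays the role of {{event.metadata.bufferKey}} in the topic.
      const topic = `Enterprise/Site-1/Area-1/Line-1/Assets/CNC/${flushed.key}/Buffered_Payload`;
      console.log(topic, flushed.events.length); // logs 6 for each asset
    }
  }
}
```

Without the key, both assets would land in a single twelve-element array, and a consumer would have to split it apart again before use.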

Use Case: Templating and Event Based Flows

This use case outlines how to use an Event type Flow to monitor an OPC UA MachineState for changes and control Output writes to the Target(s). It uses Templating to handle this event-driven Flow for multiple like-payloads using dynamic parameterization.

- Let’s start by reviewing our Dynamic Templating Source. Navigate to Connection “Example_UNS_SQL”. Click Inputs. Open “Example_UNS_SQL_Input_Get_All_CNC_Machines_AssetIDs” and click the Test Input button.

This returns an array of our AssetID values, which in this use case can be used to template configurations from one to many data points.

- Navigate to Pipeline “Example_UNS_CNC_Event_Based” and review the usage of Templating. You can see the AssetID values from our SQL source are being dynamically driven into the Sources reference and the Event expression.

- The event Expression listens for any change to the templated OPC UA tag Input “Connection.Example_UNS_OPC_UA.Example_UNS_OPC_UA_Input_Fanuc_CNC_MachineState_Templated”. If you review the payload topic (Enterprise/Site-1/Area-1/Line-1/On_State_Change/) in the UNS client, you should see Fanuc_1002 and Fanuc_1003 update frequently with a new MachineState value, whereas the MachineState of Fanuc_1001 is not changing and therefore does not update.
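The publish-on-change behavior described above can be sketched as follows. This is a plain JavaScript illustration, not the Flow's actual Event expression: createChangeDetector is a hypothetical helper, the MachineState values are made up, the per-asset sub-topic is an assumption, and treating the first reading of each asset as a change is a design choice of this sketch.

```javascript
// Hypothetical change detector: remembers the last value seen per asset and reports
// whether a new reading differs from it. The first reading for an asset counts as a change.
function createChangeDetector() {
  const last = new Map();
  return function hasChanged(assetId, value) {
    const changed = !last.has(assetId) || last.get(assetId) !== value;
    last.set(assetId, value);
    return changed;
  };
}

// AssetIDs as they might be returned by the SQL templating source.
const assetIds = ["Fanuc_1001", "Fanuc_1002", "Fanuc_1003"];
const hasChanged = createChangeDetector();

// Simulated MachineState readings over three polls (illustrative values only).
const polls = [
  { Fanuc_1001: "IDLE", Fanuc_1002: "RUNNING", Fanuc_1003: "IDLE" },
  { Fanuc_1001: "IDLE", Fanuc_1002: "IDLE", Fanuc_1003: "RUNNING" },
  { Fanuc_1001: "IDLE", Fanuc_1002: "RUNNING", Fanuc_1003: "IDLE" },
];

for (const poll of polls) {
  for (const assetId of assetIds) {
    if (hasChanged(assetId, poll[assetId])) {
      // Assumed topic layout: one sub-topic per asset under On_State_Change.
      console.log(`Enterprise/Site-1/Area-1/Line-1/On_State_Change/${assetId}`, poll[assetId]);
    }
  }
}
```

After the initial reading, only Fanuc_1002 and Fanuc_1003 produce output in this simulation, which mirrors what the UNS client shows for the example project.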

Use Case: Connecting and Conditioning ERP Data

This use case outlines how to connect ERP data over a REST connection, where we utilize a Condition to convert a complex REST input into a workable payload.

- Navigate to Connection “Example_UNS_SAP_REST_Client”, click Inputs and select “Example_UNS_SAP_Input_Get_Maintenance_Orders”. Click Test Input and review the results.

Let’s assume for this use case we are only interested in the following attributes per work order: MaintenanceOrder, MaintenanceOrderDesc, MainWorkCenter and EquipmentName. This data lives in a nested “results” attribute. Let's use a Condition and Modeling to extract only what’s relevant to our use case.

- Use the Input's Usage tab to navigate to Conditions “Example_UNS_Condition_SAP_Result” and review the Custom Condition type.

- Within Condition “Example_UNS_Condition_SAP_Result”, in the top right, select the Source and click the Test Condition button to review the results.

We are utilizing a basic JavaScript expression to return the "results" attribute. De-referencing the property from the parent structure and creating a reference component to it directly will enable the usage of Expand Arrays within the Instance.

- Navigate to Instances “Example_UNS_Maintenance_Order”, perform a Test Instance and review the results.

The conditioned object returns an array of data. We can now utilize the Expand Arrays feature. This enables us to map attributes from array element [0] and expand the array to the last element within a single Instance.

- Use the Instance's Usage tab to navigate to Pipeline "Example_UNS_Maintenance_Order".

- Enable the Pipeline "Example_UNS_Maintenance_Order".

- Review this data in your UNS client under topic Enterprise/Site-1/Area-1/Line-1/Maintenance/#
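The two conditioning steps in this use case, returning the nested "results" array and then mapping each element to the attributes of interest, can be sketched in plain JavaScript. The sample response is invented for illustration: the "d" wrapper is an assumption based on the common OData V2 layout and may differ from the actual payload, and extractResults and toMaintenanceOrders are hypothetical helper names rather than Intelligence Hub functions.

```javascript
// Sample response shape. The "d" wrapper and all field values are assumptions
// made for illustration; only the four attribute names come from the article.
const sampleResponse = {
  d: {
    results: [
      { MaintenanceOrder: "4000001", MaintenanceOrderDesc: "Replace spindle belt",
        MainWorkCenter: "MECH", EquipmentName: "CNC 1", OrderType: "PM01" },
      { MaintenanceOrder: "4000002", MaintenanceOrderDesc: "Calibrate sensor",
        MainWorkCenter: "ELEC", EquipmentName: "CNC 2", OrderType: "PM02" },
    ],
  },
};

// Condition step: return only the nested array so it can be referenced directly.
function extractResults(payload) {
  return payload.d.results;
}

// Modeling step: map every array element to the four attributes we care about,
// similar in spirit to mapping element [0] and expanding across the array.
function toMaintenanceOrders(results) {
  return results.map((r) => ({
    MaintenanceOrder: r.MaintenanceOrder,
    MaintenanceOrderDesc: r.MaintenanceOrderDesc,
    MainWorkCenter: r.MainWorkCenter,
    EquipmentName: r.EquipmentName,
  }));
}

const orders = toMaintenanceOrders(extractResults(sampleResponse));
console.log(JSON.stringify(orders, null, 2));
```

Separating the extraction from the mapping keeps each step small: the first only changes where the data is read from, and the second only decides which attributes survive.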

Summary

This reference article provided an overview of the advanced functionality the Intelligence Hub offers to build and scale Unified Namespaces. We reviewed five use cases within this project that you can reference when building your own Unified Namespace. Use these techniques and design patterns to help accelerate and demonstrate the need for industrial DataOps in your own environment.